Adversarial Tracking: Attacking 3D Multi-Object Tracking in Real Time

Han Wu, Dr. Johan Wahlström and Dr. Sareh Rowlands

Monocular 3D Object Tracking

Step 0: The Ground Truth (Training Set)

Step 1: 2D Object Detection (FRCNN)

Step 2: 3D Object Detection (Orientation)

Step 3: Motion Tracking (LSTM + GPS / IMU)

- We do not use LiDAR data (no depth information).

- 3D bounding boxes are hard for humans to label (no training set).

Step 0: How can we generate 3D Bounding Boxes?

(Ground Truth / Training set)

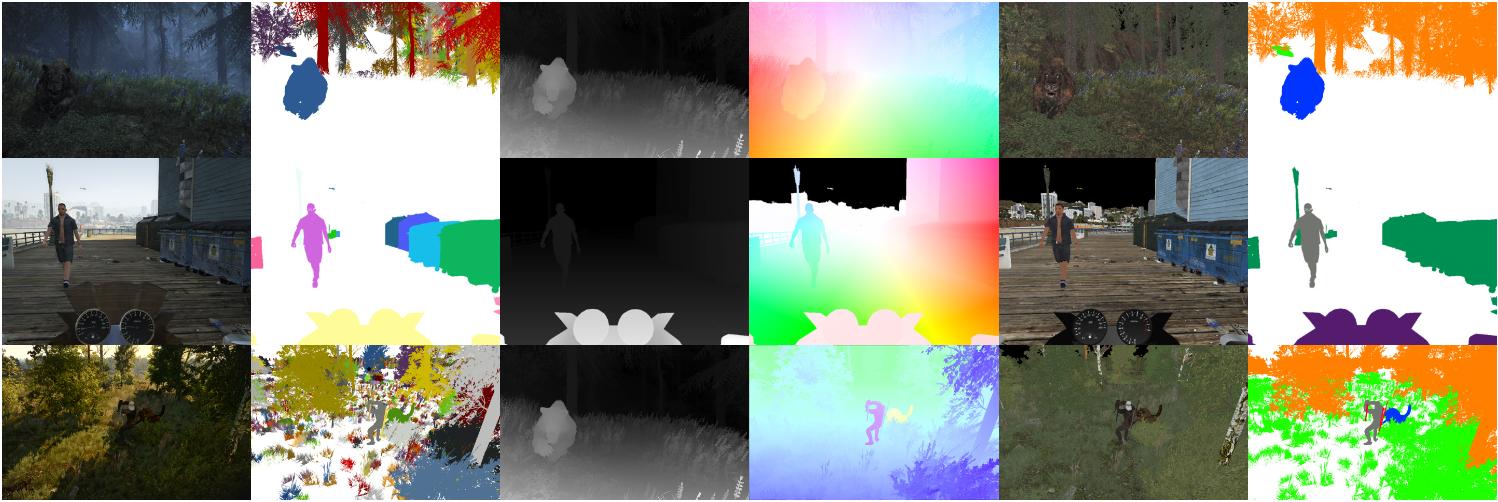

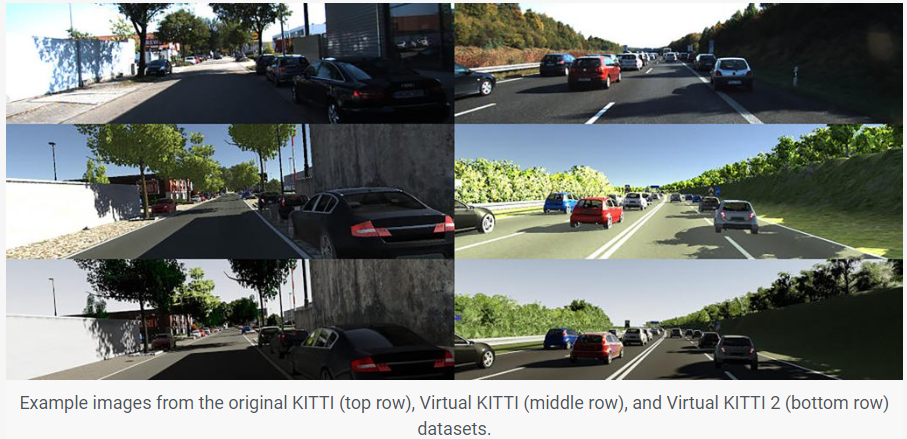

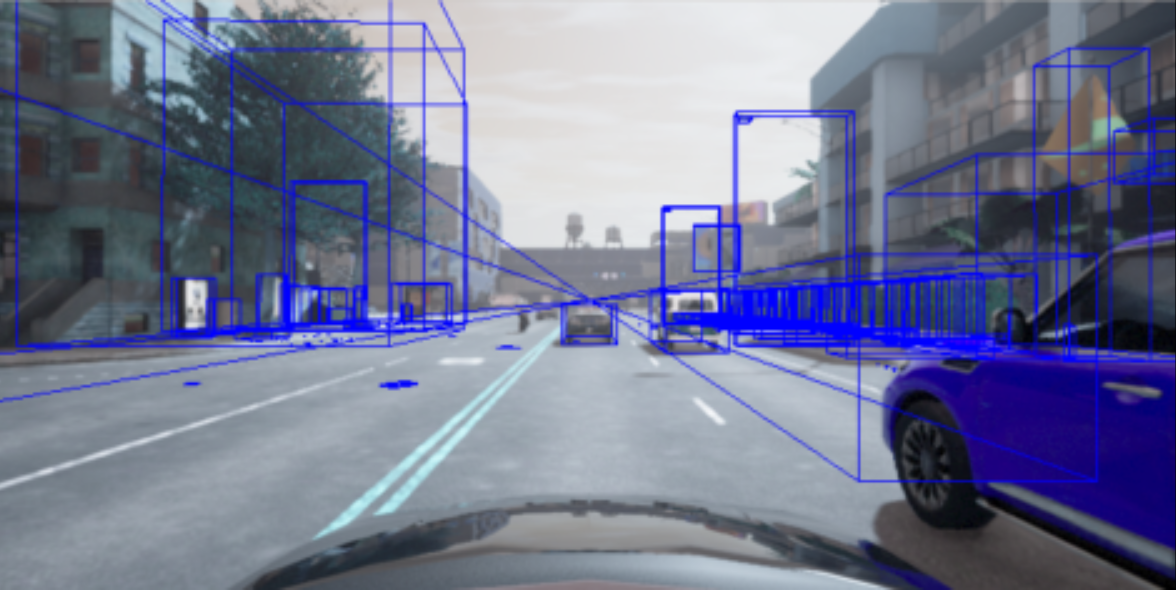

Method 1: Detouring - Extract rendering resources from Commercial Games (Direct3D 11)

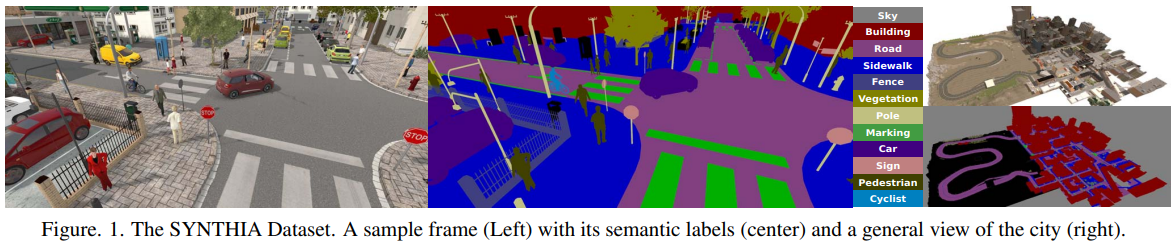

Method 3: Develop a Simulator using a Game Engine (Unreal)

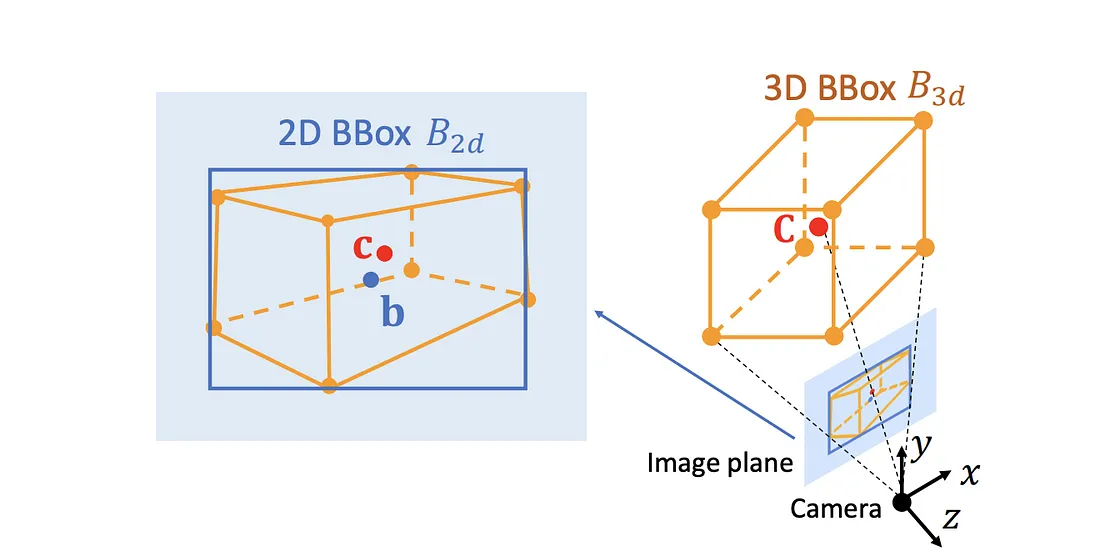

4 DoF - (x, y) (w, h)

7 DoF - (x, y, z) (w, h, l) and local yaw
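The sketch below is one concrete reading of the 7-DoF parameterisation. It expands (x, y, z), (w, h, l) and the yaw into the eight box corners that would then be projected into the image. The axis conventions are assumptions for illustration, not CARLA's API: the box centre sits at (x, y, z), l runs along the heading, w is lateral, h is vertical, and the yaw is in radians about the vertical axis. The function name `box_corners_7dof` is hypothetical.

```python
import numpy as np

def box_corners_7dof(x, y, z, w, h, l, yaw):
    """Return the 8 corners (8 x 3) of a 7-DoF 3D bounding box.

    Assumed (hypothetical) conventions: (x, y, z) is the box centre,
    l lies along the heading axis, w along the lateral axis, h along the
    vertical axis, and yaw rotates the box about the vertical (z) axis, in radians.
    """
    # Corner offsets of an axis-aligned box centred at the origin
    dx, dy, dz = l / 2.0, w / 2.0, h / 2.0
    offsets = np.array([[sx * dx, sy * dy, sz * dz]
                        for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)])
    # Rotation about the z axis by the local yaw
    c, s = np.cos(yaw), np.sin(yaw)
    rz = np.array([[c, -s, 0.0],
                   [s, c, 0.0],
                   [0.0, 0.0, 1.0]])
    # Rotate each corner, then translate to the box centre
    return offsets @ rz.T + np.array([x, y, z])

# Example: a 4.5 m long car centred at (10, 2, 0.75), heading along +x
corners = box_corners_7dof(10.0, 2.0, 0.75, 1.8, 1.5, 4.5, 0.0)
```

The 4-DoF 2D box has no depth, orientation or length. This is why the 7-DoF boxes are hard to label by hand from images alone.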

CARLA API Tutorial [Blog Post]

Step 0: From 3D global coordinates to 2D image coordinates

(Ground Truth / Training set)

Camera Projective Geometry

Projection from world coordinates $(x, y, z)$ to 2D image (pixel) coordinates, for an image of width $w$ and height $h$:

$O_{image} = K[R|t] O_{world}\ \rightarrow\ O_{image}^{'} = KM^{'}[R|t] O_{world}$

where $M^{'} = \begin{bmatrix} \cos\pi & -\sin\pi & 0\\ \sin\pi & \cos\pi & 0\\ 0 & 0 & 1 \end{bmatrix} = \begin{bmatrix} -1 & 0 & 0\\ 0 & -1 & 0\\ 0 & 0 & 1 \end{bmatrix}$, a rotation by $\pi$ about the optical axis, is applied to vehicles partially behind the camera (truncation).

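- Intrinsic matrix: $K = \begin{bmatrix} f & 0 & \frac{w}{2}\\ 0 & f & \frac{h}{2} \\ 0 & 0 & 1 \end{bmatrix}$, where $f = \frac{w}{2\tan(\mathrm{fov} \cdot \frac{\pi}{360})}$, $w \times h$ is the image size in pixels, and fov is the horizontal field of view in degrees.

- Extrinsic matrix: $[R | t] = \begin{bmatrix} \cos k & -\sin k & 0 & x\\ \sin k & \cos k & 0 & y\\ 0 & 0 & 1 & z \\ 0 & 0 & 0 & 1 \end{bmatrix}^{-1}$, the inverse of the camera pose, where $(x, y, z)$ is the camera location in world coordinates and $k$ is its yaw. The top three rows give the $3 \times 4$ matrix $[R|t]$.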
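Here is a minimal NumPy sketch of the two matrices above. It assumes fov is in degrees and the yaw k is in radians. The names `intrinsic_matrix` and `extrinsic_matrix` are hypothetical helpers. The extrinsic helper returns the full 4×4 inverse pose, and its top three rows are the 3×4 $[R|t]$ that multiplies a homogeneous world point. Like the slide, it models yaw only, so camera roll and pitch are ignored.

```python
import numpy as np

def intrinsic_matrix(w, h, fov_deg):
    """Intrinsic matrix K for a w x h image with horizontal FOV in degrees."""
    # fov * pi / 360 is half the field of view, in radians
    f = w / (2.0 * np.tan(fov_deg * np.pi / 360.0))
    return np.array([[f, 0.0, w / 2.0],
                     [0.0, f, h / 2.0],
                     [0.0, 0.0, 1.0]])

def extrinsic_matrix(x, y, z, k):
    """World-to-camera transform (4 x 4): inverse of the camera pose.

    (x, y, z) is the camera location in world coordinates and k its yaw
    in radians (assumption). Yaw-only, as on the slide.
    """
    c, s = np.cos(k), np.sin(k)
    pose = np.array([[c, -s, 0.0, x],
                     [s, c, 0.0, y],
                     [0.0, 0.0, 1.0, z],
                     [0.0, 0.0, 0.0, 1.0]])
    return np.linalg.inv(pose)

K = intrinsic_matrix(800, 600, 90.0)               # f = 400 for a 90 degree FOV
Rt = extrinsic_matrix(5.0, -3.0, 2.0, 0.3)[:3, :]  # the 3 x 4 [R|t]
```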

$\begin{aligned}O_{image}^{'} &= KM^{'}[R|t]\, O_{world}\\ &= \begin{bmatrix} f & 0 & \frac{w}{2}\\ 0 & f & \frac{h}{2} \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} -1 & 0 & 0\\ 0 & -1 & 0\\ 0 & 0 & 1 \end{bmatrix} [R|t]\, O_{world}\\ &= \begin{bmatrix} -f & 0 & \frac{w}{2}\\ 0 & -f & \frac{h}{2} \\ 0 & 0 & 1 \end{bmatrix} [R|t]\, O_{world}\\ &= K^{'}[R|t]\, O_{world}\end{aligned}$
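The derivation can be checked numerically. Building $M^{'}$ as a rotation by $\pi$ and multiplying it by $K$ should reproduce $K^{'}$, which is $K$ with its focal length negated. The `behind_camera` flag is a hypothetical parameter used only to build $K^{'}$ directly.

```python
import numpy as np

def intrinsic_matrix(w, h, fov_deg, behind_camera=False):
    """K, or K' (negated focal length) when behind_camera is True (hypothetical flag)."""
    f = w / (2.0 * np.tan(fov_deg * np.pi / 360.0))
    if behind_camera:
        f = -f  # K' from the derivation above
    return np.array([[f, 0.0, w / 2.0],
                     [0.0, f, h / 2.0],
                     [0.0, 0.0, 1.0]])

# M' = rotation by pi about the optical axis
M_prime = np.array([[np.cos(np.pi), -np.sin(np.pi), 0.0],
                    [np.sin(np.pi), np.cos(np.pi), 0.0],
                    [0.0, 0.0, 1.0]])

K = intrinsic_matrix(800, 600, 90.0)
K_prime = intrinsic_matrix(800, 600, 90.0, behind_camera=True)

# K M' equals K' up to floating-point error
print(np.allclose(K @ M_prime, K_prime))  # True
```

Right-multiplying by $M^{'}$ only flips the sign of the first two columns of $K$. This is why the principal point $(\frac{w}{2}, \frac{h}{2})$ is unchanged.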

Step 1: 2D Object Detection

(YOLO / Faster RCNN / SSD)

Step 2: 3D Object Detection

(2D Bounding Boxes --> 3D Bounding Boxes --> 3D Global Coordinates)

From 2D Bounding Boxes to 3D Bounding Boxes

From 3D Bounding Boxes to 3D Global Coordinates

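$O_{image}^{'} = KM^{'}[R|t]\, O_{world} = [u\ v\ z]^{T} \sim [\frac{u}{z}\ \frac{v}{z}\ 1]^{T} = [x\ y\ 1]^{T}$

Dividing by $z$ maps the homogeneous point to pixel coordinates $(x, y)$, but $[x\ y\ 1]^{T} \neq [u\ v\ z]^{T}$. Every world point along the same viewing ray lands on the same pixel, so the depth information is missing!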
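This small sketch shows why depth is lost. It assumes a standard pinhole camera frame with Z along the optical axis. That is an assumption: the z-up frame of the extrinsic matrix first needs an axis permutation, which the slides do not show. Two points on the same viewing ray project to the same pixel, and the projection can only be inverted once a depth is supplied from elsewhere. `project` and `back_project` are illustrative names.

```python
import numpy as np

f, w, h = 400.0, 800.0, 600.0
K = np.array([[f, 0.0, w / 2.0],
              [0.0, f, h / 2.0],
              [0.0, 0.0, 1.0]])

def project(K, p_cam):
    """Camera-frame point (X, Y, Z) -> pixel (x, y); Z is assumed to be depth."""
    u, v, z = K @ p_cam               # homogeneous [u v z]^T
    return np.array([u / z, v / z])   # dividing by z discards the depth

def back_project(K, pixel, depth):
    """Pixel (x, y) -> camera-frame point; only possible once depth is known."""
    x, y = pixel
    return depth * (np.linalg.inv(K) @ np.array([x, y, 1.0]))

near = np.array([1.0, 0.5, 10.0])
far = 3.0 * near                      # same viewing ray, three times farther
print(project(K, near), project(K, far))  # both give pixel (440, 320)
```

`back_project(K, (440, 320), 10.0)` recovers `near`. With depth 30 the same pixel recovers `far`, so the pixel alone cannot tell them apart.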

Step 3: Multi-Object Motion Tracking

(Monocular 3D Object Tracking)

Joint Monocular 3D Vehicle Detection and Tracking: https://eborboihuc.github.io/Mono-3DT/

Monocular Quasi-Dense 3D Object Tracking: https://eborboihuc.github.io/QD-3DT/

Requires a GPS or IMU sensor to compute the extrinsic matrix $[R|t]$.
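Once GPS/IMU gives the ego pose, a 3D box centre estimated in the camera frame can be lifted to global coordinates. This lets a tracker associate the same vehicle across frames. The sketch follows the extrinsic-matrix slide and assumes a yaw-only pose, x forward and z up, and yaw in radians. `pose_matrix`, `camera_to_world` and `world_to_camera` are hypothetical names.

```python
import numpy as np

def pose_matrix(x, y, z, yaw):
    """Camera-to-world pose (4 x 4) from a GPS position and an IMU yaw (radians).

    Yaw-only, as on the extrinsic-matrix slide; roll and pitch are ignored (assumption).
    """
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0, x],
                     [s, c, 0.0, y],
                     [0.0, 0.0, 1.0, z],
                     [0.0, 0.0, 0.0, 1.0]])

def camera_to_world(p_cam, pose):
    """Map a 3D point from the camera frame to global coordinates."""
    return (pose @ np.append(p_cam, 1.0))[:3]

def world_to_camera(p_world, pose):
    """Inverse mapping, i.e. applying [R|t] = pose^-1."""
    return (np.linalg.inv(pose) @ np.append(p_world, 1.0))[:3]

# Hypothetical example: a vehicle 10 m ahead while the ego camera faces +y
pose = pose_matrix(100.0, 50.0, 0.0, np.pi / 2)
centre = camera_to_world(np.array([10.0, 0.0, 0.0]), pose)  # approx (100, 60, 0)
```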

Step 4: Universal Adversarial Perturbation

(Adversarial Attacks)

Thanks